Bitwise Operators For Algorithmic Efficiency

In the pursuit of writing efficient programs and algorithms, there are a host of techniques and strategies developers can employ, but I think one avenue that often is under appreciated or forgotten about entirely is the use of bitwise operators. Of course, thats not to imply they're always even relevant; the scope of the problem certainly has to be appropriate. However, when they are appropriate and leveraged strategically, they can lead to some ultra efficient algorithms.

Even if one doesn't ever encounter a use case for it, which is probably the overwhelming majority of developers, it is still fascinating to see how they can be used to solve certain problems.

Disclaimer

Blindly throwing bitwise operators in production code will very likely lead to major footguns, and possibly readability and maintability issues as well. Use with caution! Also, I don't think they're particular useful to ask about in a technical interview unless the job requires this kind of domain knowledge.

Example: Check If An Integer Is A Power Of Two

Suppose you were trying to write a function that checks whether or not an integer is a power of two. To be precise,

an integer, say a is a power of two if we can raise two to the power of another integer, say b , where b >= 0 to get a.

For instance, if a = 16, then our function should return true since we can find an integer (b = 4) that

satisfies the requirement (2^4 = 16). However if a = 6, then our function

should return false since no such b exists to satisfy the requirement.

To solve this problem, I think most people, including myself, would naturally come up with the following algorithm:

Starting from a, use a while loop to check that as long as a % 2 == 0, we can keep dividing a by

two until the condition becomes false. At the point in which it becomes false, a can either be 1 or some other value.

If it's 1 then we know that a is a power of two since we'd eventually reach a case where a == 2 and 2/2 == 1.

In all other situations, we wouldn't reach that base case, so we know it can't be a power of two.

The code might look something like this:

If we analyze the time complexity of the above algorithm, clearly it depends on the size of a. Though,

since at each step we're reducing the size by half, we can get away with saying that the running time

is roughly logarithmic.

Usually if we have an algorithm that has a logarithmic running time, we're quite happy and not really looking to make further improvements. We'll see very soon that we can actually improve upon this!

Using Bitwise Operators

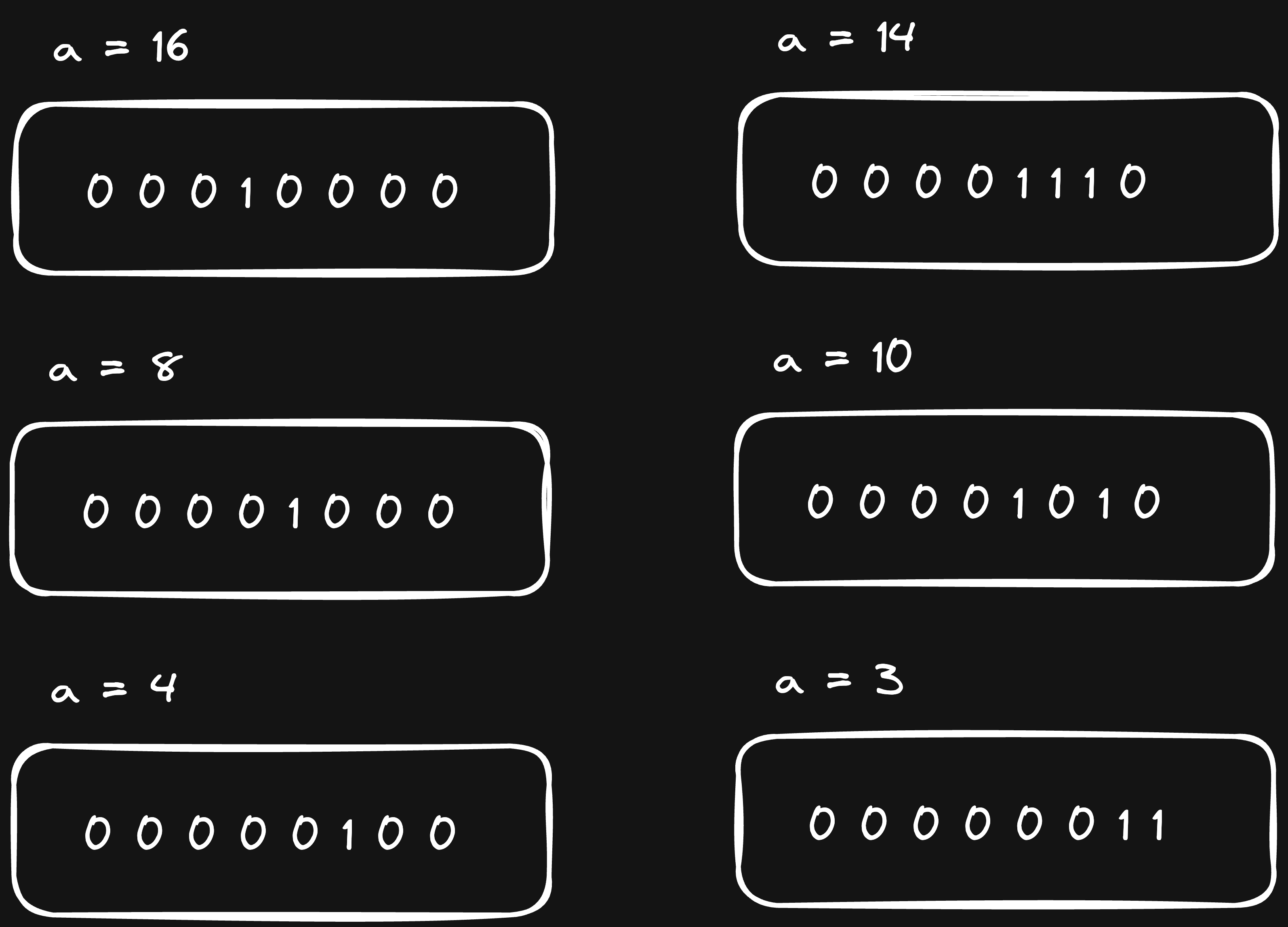

Before diving into which bitwise operators we will use and how we will use them to solve the problem, lets first look at the binary representation (using 8 bits) of a few numbers to see if we notice any patterns:

In the left column, are all numbers that are valid powers of two per the requirement. Notice that they all share something in common: There is only a single bit that is 1. This makes sense too since after all, it is a power of two!

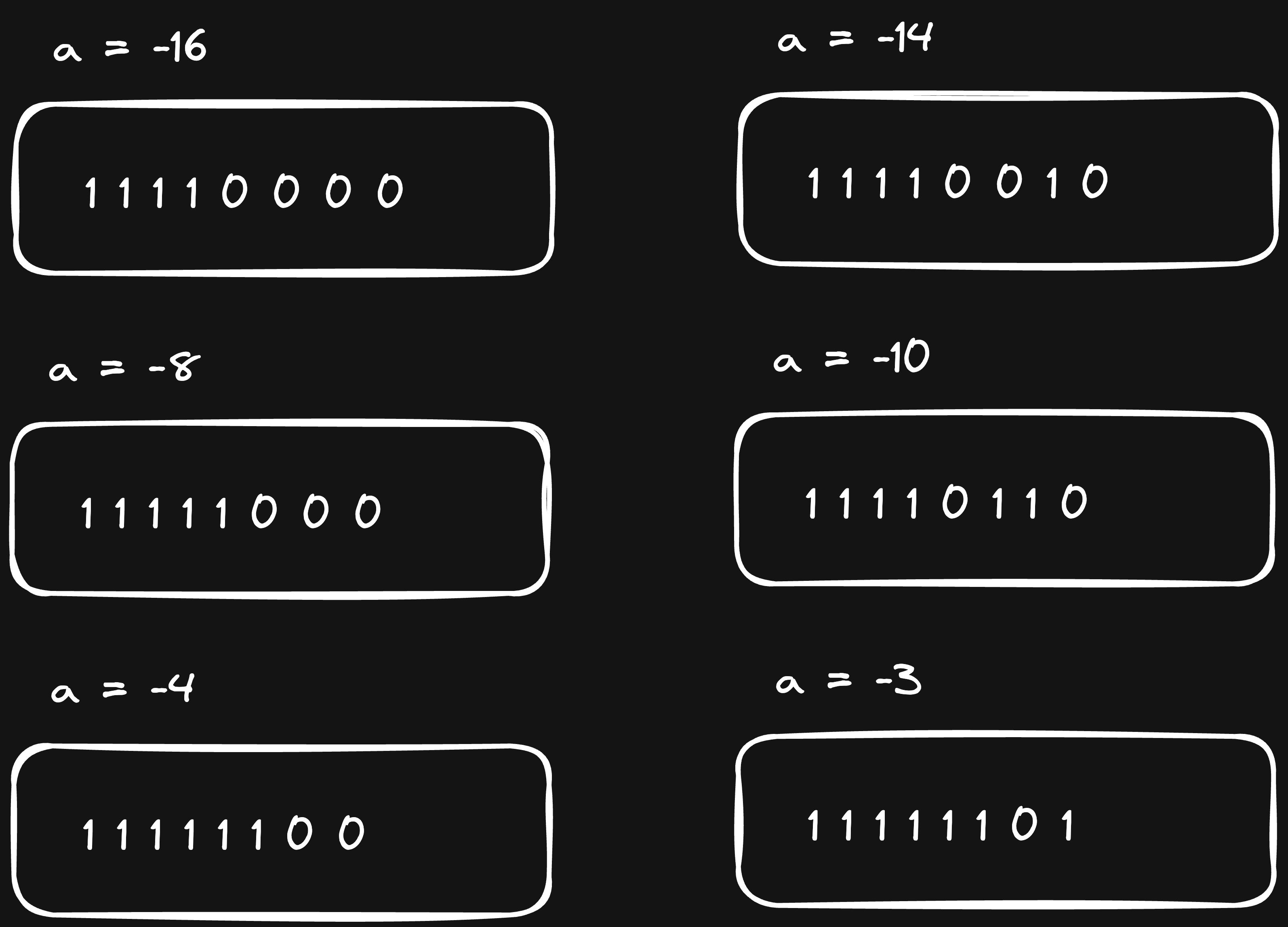

Next, lets look at the corresponding negative representation for each of the above with the leading bit indicating the sign (-128):

Observe for the numbers in the left column that the right most 1 bit is in the same position as the positive representation for that number. For the numbers in the right column that is not the case. In fact, in the left column, when comparing with the positive representation, all of the bits to the left of the 1 bit are exactly inverted. This isn't just a coincidence either, its due to two's complement.

Two's complement is a very common way computers represent signed integers. To compute the two's complement of an integer, invert all the bits (bitwise NOT) and add one. For powers of two, there is a single bit with the value of 1 and the rest are 0. For such numbers this means inverting all the bits would turn that position to 0 and the rest would become 1. Then, when 1 is added to the result, we perform a carry in every position until we reach the position holding 0, which then becomes 1 and then the following bits become 1. Hence, all numbers that are powers of two behave this way.

How does this help us? Well, looking closely at the negative representation of the powers of two with the positive one, if we take the bitwise AND of those two numbers, the result would give us back the positive one. This means an integer is a power of two when this is true!

The Algorithm

Given the above, the algorithm is quite straightforward. If the bitwise AND between an integer a and its negative

counterpart -a is equal to a, then we can say a is a power of two:

In terms of performance, the running time is constant since it does not depend on the size of the integer. Compared to the previous algorithm, this is a massive improvement.